EarthQube

Here I am diverging a bit from the usual I guess. So at this point I am still working at the Remote Sensing and Image Analysis department at the Technical University of Berlin. The usual thing for me is to write a paper to submit to a remote sensing, machine learning, neural networks or even a computer vision journal or conference. But wait the title says database conference!

Yeah. In this post, I am writing a paper for a database conference because we are collaborating with the database people from upstairs! Cross paper writing is always good just like cross-training. You do not always want to weight lifts. You want to achieve an overall fitness. You want to run, swim, jump rope and even climb. So I am mixing it up.

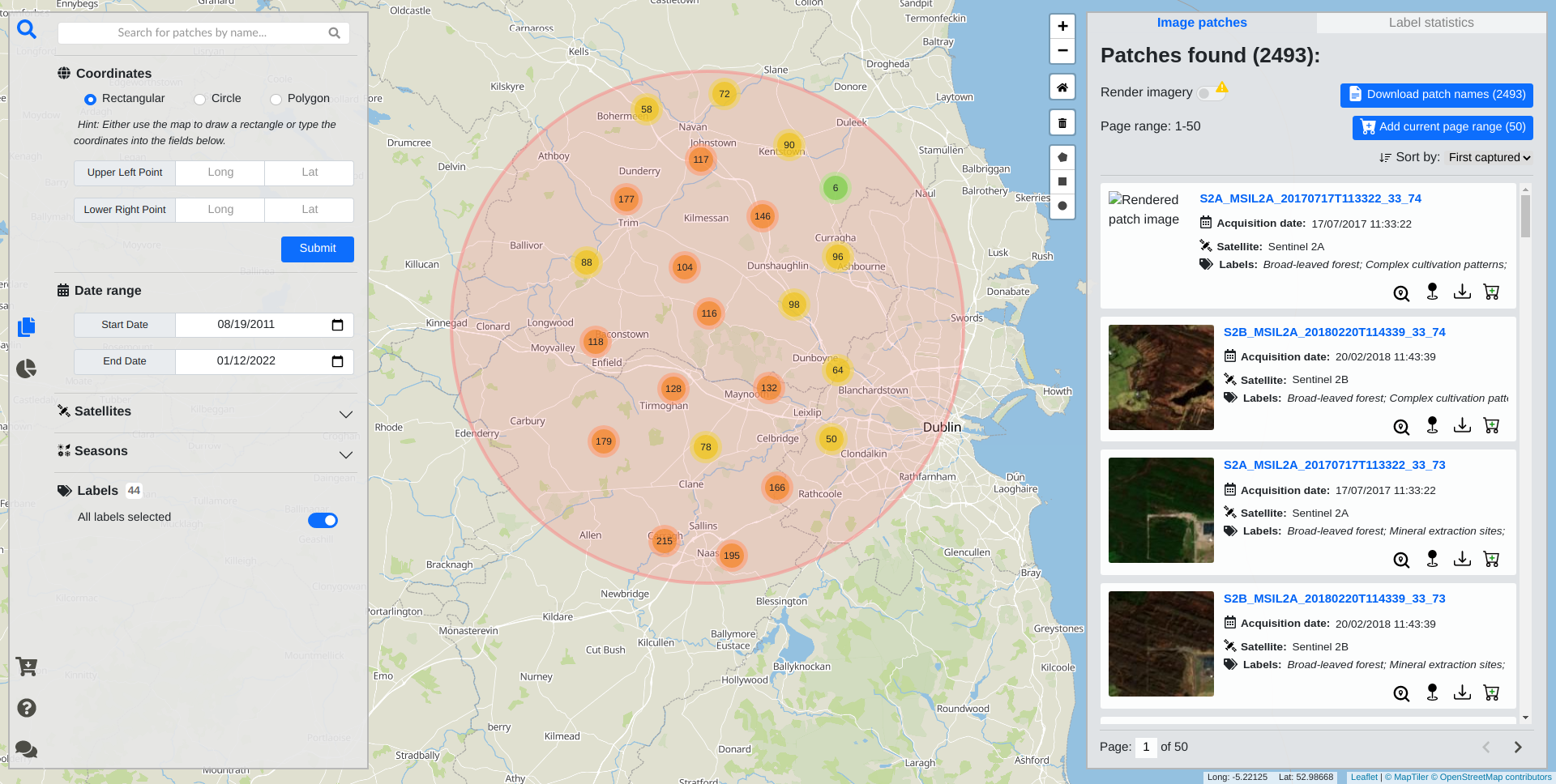

Our department has this dataset BigEarthNet that is pretty popular in the remote sensing world. It is downloaded many times, and it is among the Tensorflow Datasets and all that. But it is kinda hard to explore the dataset because it consists of about 600k images each having multiple bands and represented as tif files. So yeah, the dataset is created just like almost every other dataset for training neural networks. However, since there is a lot of images in the dataset, it was probably somehow interesting to the Database Systems and Information Management Group at the university. So one of the students created a portal for his thesis for exploring the dataset in a map interface.

I think what he has built for a thesis is pretty good. It had a Spring Boot as backend, MongoDB as database and as frontend… A single javascript file with 6000 lines, a very long single HTML file and a very long CSS file. I will come to this later, give me a second. My task was to integrate a content-based image retrieval approach for fast similarity search in satellite image archives to the portal. Our department had a paper published on content-based image retrieval, so why not to integrate it to the portal? If you do not know what content-based image retrieval is, it is when a model tries to retrieve similar images to a query image without any metadata. So a model is trained learning the semantic content of the images in the training set. It learns to map each image to a 128 bit hash code which represents the image content. When a query image comes, the hashcode of the query image is compared to every pre-computed hash code in the database and the ones with the lowest Hamming distance are returned. So I added that to the portal, and we wrote a paper about it. We called the system EarthQube. Here is the paper that we wrote: Satellite Image Search in AgoraEO. Unfortunately neither the Earthqube itself nor the code for the project is currently public. However, here is the video that I have taken for the VLDB 2022 submission. Enjoy!

Alright. I am back at the single file kingdom of frontend. I talked to the guy who has written the frontend. He did not have enough time for it, and in the beginning thought that it was just gonna be a couple calls to the backend and all that. I mean I do not know how he thought it was just gonna be a couple of calls but not having time argument was valid. Because at the university, you do not get a lot of time and support to write a full stack application in your thesis. Another point is, it was hard to even setup the project. The README included 21 steps to set the project up in text. In plain English.

It was hard to even get it up and running. So, I created a micro-service architecture; dockerized everything;

added an Nginx reverse proxy; added Vite.js support for bundling, building and development server capabilities.

I split the Javascript code into different modules. I used esmodules when doing that; wrote imports and all that.

This was hard because there was ton of stuff calling each other and you did not know what is coming from where.

But at the end it is worth it. Now, I can setup and run the whole application by doing docker-compose up.

Until next time!