Can I call myself a scientist now?

I wrote my Bachelor's thesis in the department of Remote Sensing and Image Analysis at the Technical University of Berlin. When I was studying for my Bachelor's degree (2017-2020), deep learning was shining more than almost any other field in computer science. Starting in 2012 with the ImageNet paper (I know deep learning is older, chill), deep learning attracted a lot of attention in the literature and subsequently in the industry. I also got interested in deep learning, as it was amazing to see how capable these models were when it comes to recognition and perception tasks.

So in my 3rd semester, I took a computer vision class. I did not learn much there other than some camera angles, triangulation, denoising filters, blurring filters etc. So I decided to write my Bachelor's thesis somewhere I could work with deep learning and learn TensorFlow, PyTorch and whatever else is being used to do deep learning. So I looked at what the computer vision department had to offer, and it was mostly 3D stuff, cameras and all that. I went to talk to the professor about writing a system for face detection in videos, but he rejected it because it was too practical and not novel enough. Even though I was at the top of the computer vision class in the year I took it, the professor was not really interested in working with me. As my years at the university passed, I figured out that the thesis-writing system sucks at the Technical University of Berlin (maybe not only in Berlin, but in Germany overall).

The Technical University of Berlin accepts tons of people to its computer science undergraduate program. In my year, it was about 800 people. As far as I know, about half of these people drop out. Even so, since everybody in the undergraduate program has to write a thesis, there are a lot of people who are constantly looking for a thesis supervisor. So it is pretty hard to find a supervisor for your thesis. There are simply not enough people at the university to supervise them. There are three solutions that I see here:

hire more PhD students

accept fewer students

do not require every undergraduate student to write a thesis

I do not see any of these happening. So it sucks, and nobody does anything about it. I know people who looked for a thesis supervisor for literally a year. A YEAR. Unbelievable. And this is not their fault at all.

So anyways, I found out that there is a Remote Sensing and Image Analysis department at the university. And they basically do the same things as the computer vision department, but on satellite data. Awesome. That is what I want. Actually, the remote sensing department was working almost solely with deep learning. Even better. I got a topic from the remote sensing department and started working on my thesis. I completed my thesis a couple of months later, and learned TensorFlow, more Python, image analysis tools etc. along the way. So it was pretty cool to be able to write deep learning code. Ah, I also learned Vim while writing the thesis, and I use Vim every day.

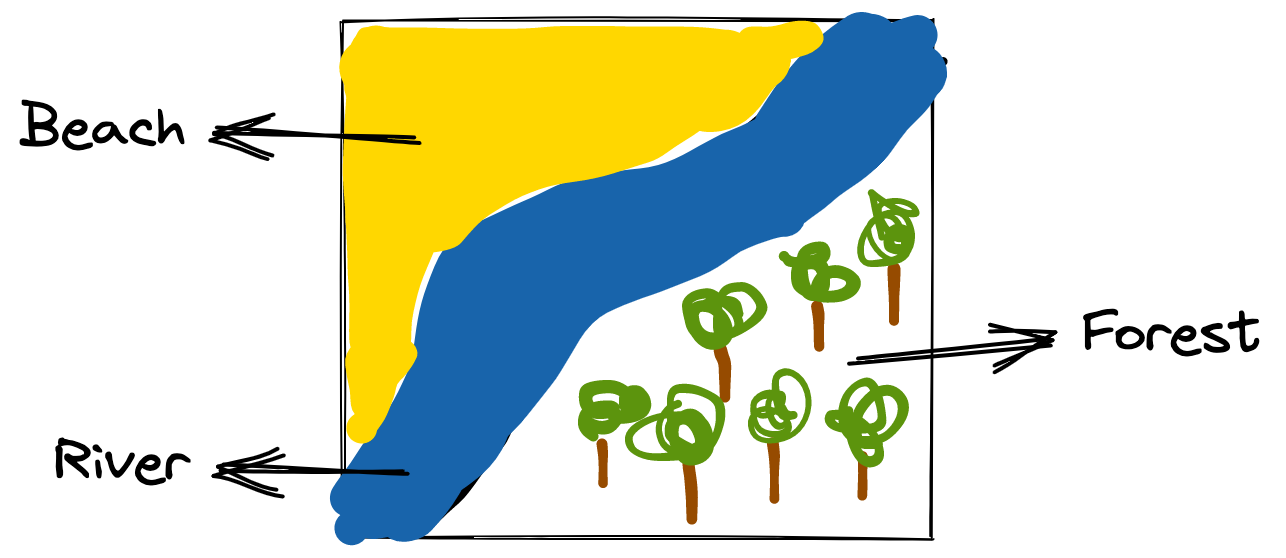

My topic was Collaborative Learning Models for Classification of Remote Sensing Images with Noisy Labels. The thing is, we have these satellite images with multiple labels. So the satellite image below has three different labels, Beach, River and Forest, because the image contains all three. There are hundreds of thousands of these images, and deep learning models use them to understand the semantic content of images and make predictions about unseen images.

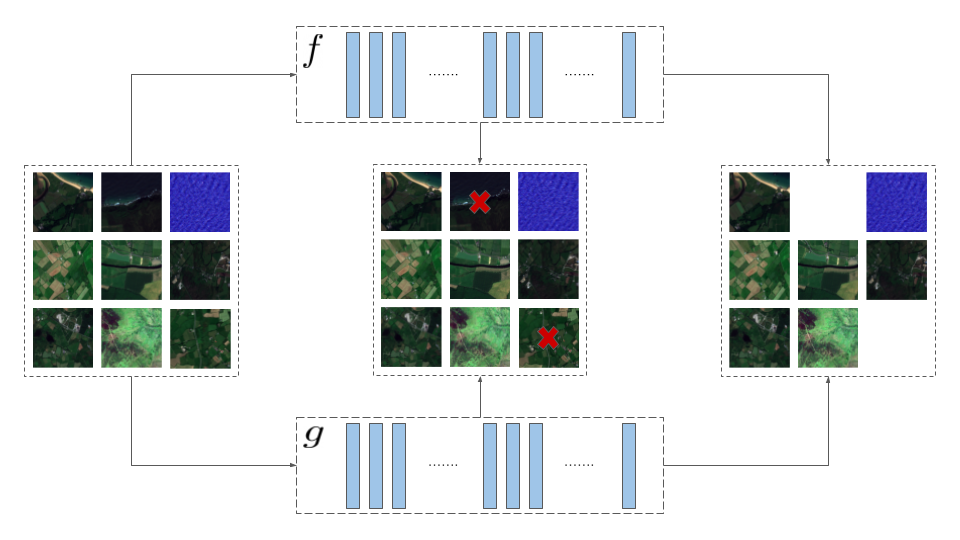

However, sometimes images come with wrong, missing or extra labels, which we call noise. For example, suppose the labels for the image above are: Airport, Beach, River and Forest. In this case there is an extra label for something that is actually not in the image. Another case may look like this: Beach and Forest. In this case the label River is missing. These two cases can also happen at the same time: Airport, Beach, Forest. Depending on the rate of noise in the labels, neural networks are misled by it. My task was to write a collaborative network architecture where two neural networks train on the same data while attempting to learn different features of it, and thereby avoid the negative effects of noise in training. They would exchange information while training and help each other learn from cleaner samples.
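To make the noise cases above a bit more concrete, here is a minimal sketch in plain Python. It is only an illustration, not the code from the thesis or the papers: the four-class vocabulary `CLASSES`, the helper names and the independent random flipping in `add_label_noise` are all my assumptions for this example.

```python
import random

# Hypothetical label vocabulary, taken from the examples in this post.
CLASSES = ["Airport", "Beach", "Forest", "River"]


def to_multi_hot(labels):
    """Encode a set of label names as a 0/1 vector over CLASSES."""
    return [1 if name in labels else 0 for name in CLASSES]


def from_multi_hot(vector):
    """Decode a 0/1 vector back into a list of label names."""
    return [name for name, bit in zip(CLASSES, vector) if bit == 1]


def add_label_noise(vector, flip_rate, rng):
    """Flip each entry independently with probability flip_rate.

    A 0 -> 1 flip adds an extra label, a 1 -> 0 flip drops a label.
    This is an assumed, simplified noise model for illustration.
    """
    return [1 - bit if rng.random() < flip_rate else bit for bit in vector]


# The clean labels and the three noisy cases described above.
clean = to_multi_hot({"Beach", "River", "Forest"})
extra = to_multi_hot({"Airport", "Beach", "River", "Forest"})
missing = to_multi_hot({"Beach", "Forest"})
both = to_multi_hot({"Airport", "Beach", "Forest"})

print(clean)    # [0, 1, 1, 1]
print(extra)    # [1, 1, 1, 1]
print(missing)  # [0, 1, 1, 0]
print(both)     # [1, 1, 1, 0]

# Randomly corrupt the clean labels; the result depends on the seed.
rng = random.Random(42)
print(from_multi_hot(add_label_noise(clean, flip_rate=0.25, rng=rng)))
```

With a multi-hot encoding like this, an extra label is just a 0 that became a 1, and a missing label is a 1 that became a 0, so the noise rate can be thought of as how often these flips happen.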

If you want a better (more scientific) explanation and want to learn more about it, check out the following paper: A Consensual Collaborative Learning Method for Remote Sensing Image Classification Under Noisy Multi-Labels.

You can find the code in the following GitLab repository: CCML.

Oh yeah, where was I? After I completed the thesis, we wrote a paper about the topic (see the link I just gave), and it was published at the IEEE ICIP 2021 conference.

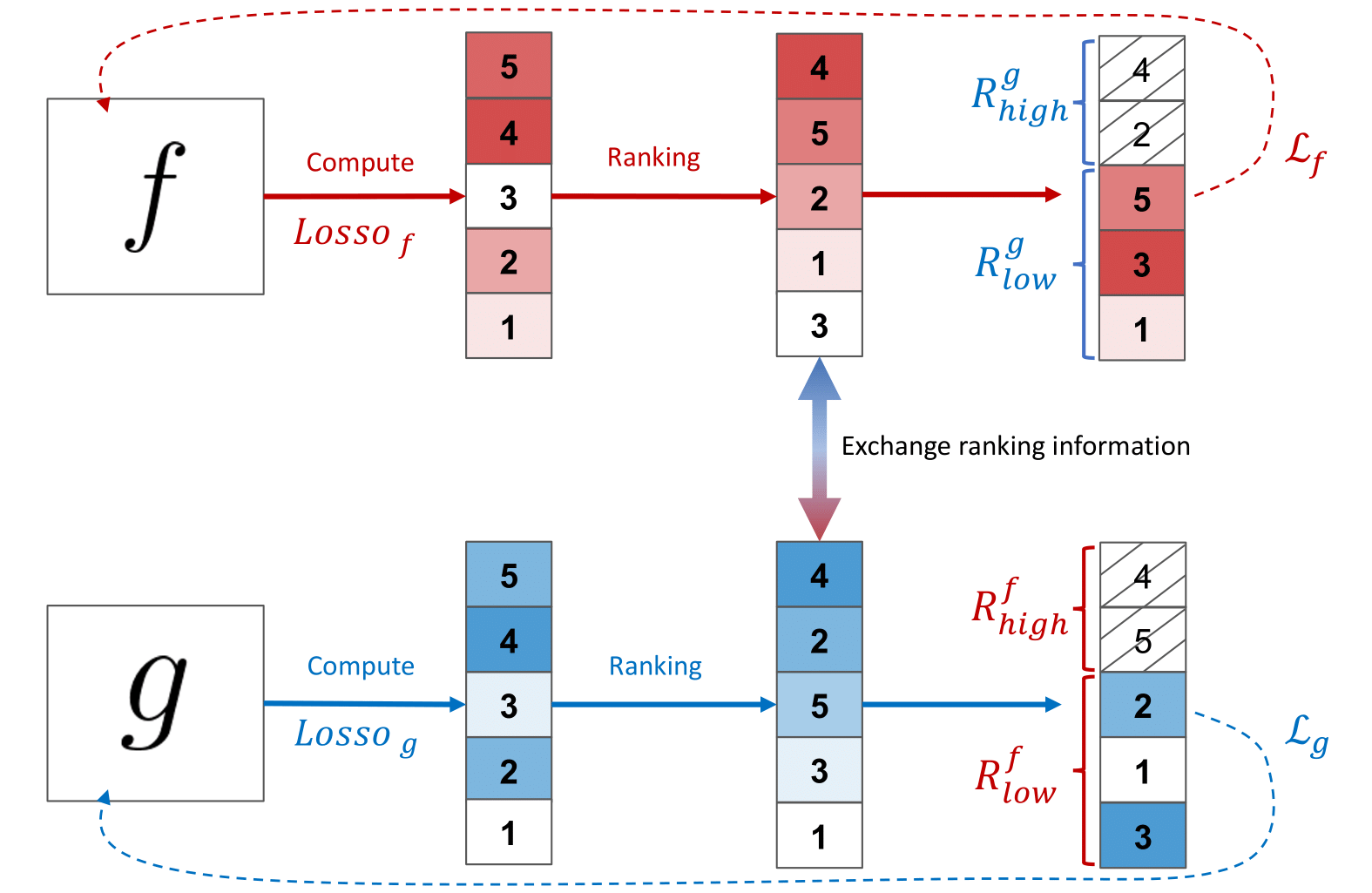

After that, we also published a longer and a wee bit more developed version of this paper in the IEEE TNNLS journal. If you want to learn about it, check out the following paper: Multi-Label Noise Robust Collaborative Learning for Remote Sensing Image Classification.

You can find the code in the following GitLab repository: RCML.

A figure from the RCML paper.

All in all, it was cool to have a Google Scholar page, and I had fun checking my distance to Tim Berners-Lee.